Published 21:51 IST, July 7th 2024

Why AI may fail to unlock the productivity puzzle

AI is a potential breakthrough: BlackRock CEO Larry Fink claims it will transform margins across sectors.

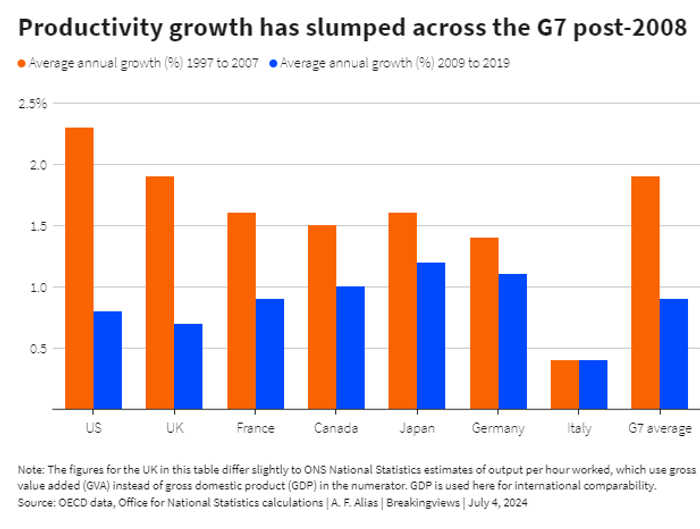

Puzzle trouble. The world’s advanced economies are in the grip of a prolonged productivity crisis. In the decade following the financial crisis of 2008, the growth of output per hour worked in the Group of Seven rich countries slumped to less than 1% a year – less than half the rate of the decade before. This dismal performance is the single biggest economic problem facing the developed world – as well as the root of much political and geostrategic angst.

Artificial intelligence is a potential breakthrough. BlackRock CEO Larry Fink claims it will “transform margins across sectors”. Goldman Sachs predicts it will boost productivity growth by up to 3 percentage points per year in the United States over the next decade. The McKinsey Global Institute says it could add up to $26 trillion to global GDP.

Investors should beware of the hype. Four features of AI suggest that while its impact on the bottom line of some companies may be positive, its economy-wide consequences will be less impressive. Indeed, self-teaching computers may make the productivity crisis worse.

Start with AI’s impact on the most fundamental driver of modern economic growth – the accumulation of new scientific knowledge. Its prodigious predictive powers have enabled notable advances in certain data-heavy areas of chemistry and biology. Yet the potential of science to generate useful knowledge relies on its ability not just to predict what happens, but to explain why it does so.

Ancient Babylonians, for example, were no slouches when it came to predicting astronomical phenomena. Yet they never developed an understanding of the laws of physics that explain why these events occur. It was only with the discovery of the scientific method – constructing explanatory theories and subjecting them to experimental tests – that scientists began to grasp how the universe works. It is that ability to understand as well as predict that enables modern scientists to land a man on the moon – a feat of which their Babylonian ancestors could only dream.

AI models are digital Babylonians, rather than automated Einsteins. They have revolutionised the ability of computers to identify useful patterns in huge datasets, but they are incapable of developing the causal theories needed for new scientific discoveries. As University of California computer scientist Judea Pearl and coauthor Dana Mackenzie put it in their 2018 best-seller “The Book of Why”: “Data do not understand cause and effect: humans do.” Without causal reasoning, AI’s predictive genius will not be making human scientists redundant.

A second argument made for AI is that it will reduce corporate costs by automating much more basic knowledge work. That is a more plausible claim, and there is early evidence in its favour. One recent study found that introducing AI-powered chatbots helped customer support functions resolve 14% more issues per hour. The caveat is that the likely aggregate impact of such efficiency improvements is surprisingly modest.

Daron Acemoglu of the Massachusetts Institute of Technology estimates that 20% of current labour tasks in the United States could be performed by AI, and that in around a quarter of those cases it would be profitable to replace humans with an algorithm. Yet even if this replaces nearly 5% of all work, Acemoglu calculates that broad productivity growth would increase by only around half a percentage point over 10 years. That is barely a third of the ground lost since 2008.
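The arithmetic behind those figures can be checked on the back of an envelope. The sketch below uses only the rounded numbers quoted above, not Acemoglu's exact estimates. It also makes one assumption the text does not settle: that "half a percentage point over 10 years" is a cumulative gain spread evenly across the decade. The variable names are illustrative.

```python
# Back-of-envelope check using the rounded figures quoted in the text.
exposed_share = 0.20     # share of US labour tasks AI could perform
profitable_share = 0.25  # "around a quarter" of those worth automating

# Share of all work that would actually be handed to an algorithm.
automated_share = exposed_share * profitable_share
print(f"Share of all work replaced: {automated_share:.1%}")

# ASSUMPTION: read "half a percentage point over 10 years" as a cumulative
# gain in the productivity level, spread evenly over the decade.
cumulative_gain = 0.005
years = 10
annual_gain = (1 + cumulative_gain) ** (1 / years) - 1
print(f"Implied boost per year: {annual_gain:.3%}")
```

On that reading, the yearly boost is a small fraction of a percentage point, which is why the aggregate effect looks modest next to the headline forecasts.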

Any recovery in economic dynamism would be welcome. The third challenge, however, is that in an important class of cases, embracing AI may send productivity gains into reverse.

Some of the technology’s early successes have come in its application to games. In 2017, for example, Google DeepMind’s AlphaZero programme stunned the world by demolishing even its most advanced computer rivals at chess. This highlighted the potential to deploy AI’s strategic nous in other competitive settings such as financial trading or digital marketing. The snag is that in real life – unlike in games – the other players can also invest in AI. The result is that spending which may be rational for any individual company is collectively self-defeating. An AI arms race will ramp up costs but leave overall revenues unchanged.
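The logic of that arms race can be illustrated with a toy two-firm example. Every number below is hypothetical and chosen only to make the point: the market size, the cost of the AI investment and the revenue share a lone investor captures are assumptions, not estimates from any study.

```python
# Toy two-firm market: total revenue is fixed, AI only shifts who gets it.
# All figures are hypothetical.
TOTAL_REVENUE = 100.0  # assumed size of the market
AI_COST = 15.0         # assumed cost of investing in AI
EDGE = 0.7             # assumed revenue share won by a lone investor


def profit(invests: bool, rival_invests: bool) -> float:
    """Profit for one firm given its own and its rival's choice."""
    if invests == rival_invests:
        share = 0.5        # matching choices cancel each other out
    elif invests:
        share = EDGE       # this firm invests alone and gains share
    else:
        share = 1 - EDGE   # the rival invests alone and takes share
    return share * TOTAL_REVENUE - (AI_COST if invests else 0.0)


for mine in (False, True):
    for rival in (False, True):
        print(f"invest={mine!s:<5} rival invests={rival!s:<5} "
              f"profit={profit(mine, rival):.0f}")
```

With these made-up numbers, investing pays whatever the rival does (55 against 50 if the rival holds back, 35 against 30 if it invests). Yet when both invest, each earns 35 rather than the 50 they would have kept had neither spent anything.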

The history of quantitative investing provides a cautionary tale. In the early 1970s, investors first identified systematic factors such as value and momentum, and the few firms willing to spend money on statistical research enjoyed super-normal returns. By the end of the decade, however, their competitors were running the numbers too. The excess returns were competed away, but everyone continued to incur the costs.

The same self-defeating dynamic will apply elsewhere as well. In the analogue world, the dirty secret of advertising was that it was often a race to stand still. In one of Harvard Business School’s most famous teaching cases, David Yoffie studied the so-called “cola wars” waged by Coca-Cola and PepsiCo between 1975 and the mid-1990s. Between 1981 and 1984, Coke doubled its advertising spend. Pepsi responded by doing the same. The net result was almost no change in the two companies’ relative market shares, achieved at higher cost all round. In the age of digital marketing, AI risks bringing the cola wars to every corner of the economy.

That implies a fourth feature of AI which will deliver a more insidious blow to productivity. If an AI arms race makes massive capital investment the table stakes just to maintain market share, smaller players will inevitably be squeezed out. Industries will tend towards oligopoly. Competition will tend to decrease. Innovation will suffer – and productivity will slump even more.

In 1987, the Nobel Prize-winning economist Robert Solow bemoaned the fact that “you can see the computer age everywhere but in the productivity statistics.” The effects of AI may soon be all too evident – just not in the positive way the technology’s advocates expect.

Updated 21:51 IST, July 7th 2024